Naukri Webscraper

Quick Summary

Naukri Webscraper

A Python tool that automates job searches on Naukri.com, enabling users to filter listings by skills and export structured data for analysis.

- Context: Personal project, Mar 2024, Python, Selenium, Pandas

- Role: Sole developer; designed, implemented, tested, and documented the project

- Impact: Automated skill-based job search and CSV export, validated by automated tests and real-world data extraction

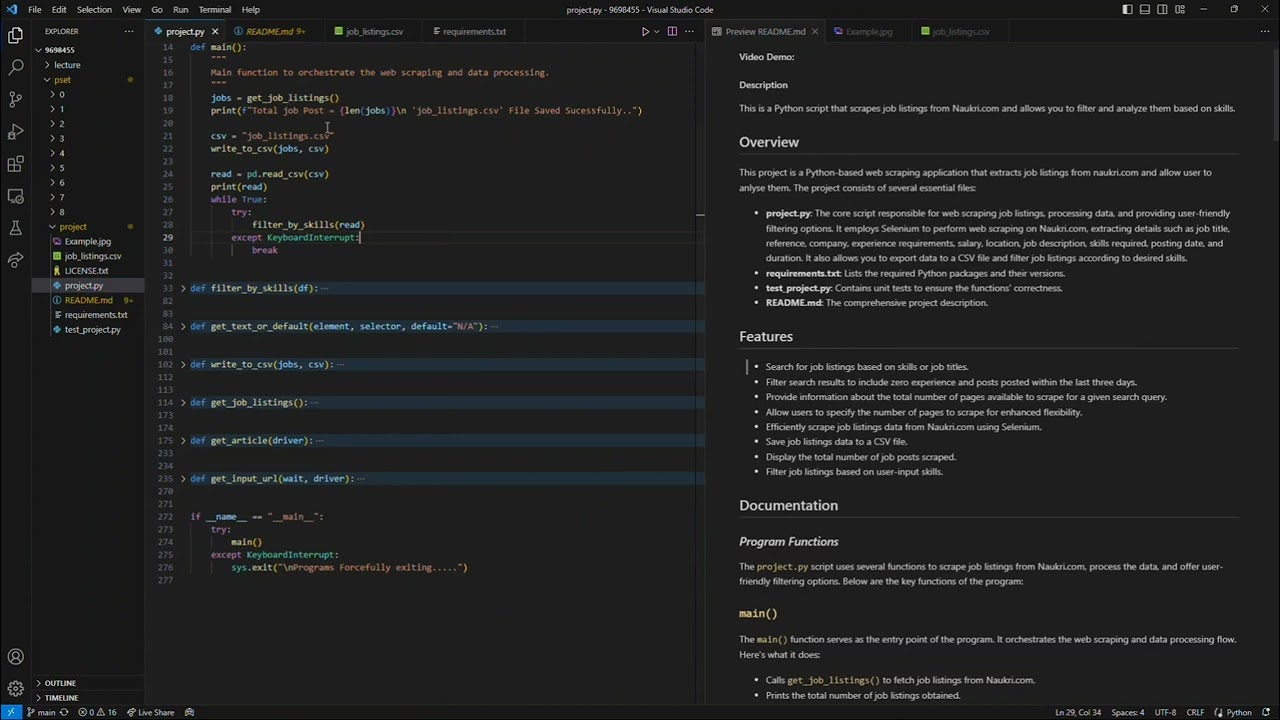

Overview

Naukri Webscraper is a Python-based automation tool that scrapes job listings from Naukri.com. It extracts job titles, companies, salaries, locations, and required skills, then filters results based on user-specified skills. The project outputs structured data as CSV files for further analysis.

The project was developed independently as a personal automation and data analysis initiative.
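The fields listed above can be pictured as one record per job card. The sketch below is only an illustration. The `JobListing` class, its field names, and the `to_row` helper are hypothetical and are not taken from `project.py`. It shows one way to keep the schema explicit and to flatten the skills list into a single CSV cell.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class JobListing:
    """One scraped job card (hypothetical schema mirroring the fields above)."""

    title: str
    company: str
    salary: str
    location: str
    skills: list[str] = field(default_factory=list)

    def to_row(self) -> dict[str, str]:
        """Flatten to a CSV-friendly dict, joining the skills into one cell."""
        row = asdict(self)  # asdict copies the list, so self.skills is untouched
        row["skills"] = ", ".join(self.skills)
        return row


job = JobListing("Python Developer", "Acme Corp", "Not disclosed", "Pune", ["Python", "Selenium"])
print(job.to_row())
```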

Recent updates:

- Added automated tests for core scraping and filtering logic (`test_project.py`) using `pytest`

- Improved error handling and robustness in dynamic content extraction

- Updated documentation and usage instructions in `README.md`

Goals

- Automate the retrieval and filtering of job listings from Naukri.com based on user-defined skills.

- Simplify and accelerate the job search process by eliminating manual filtering.

- Provide structured, exportable data for further analysis.

Responsibilities

- Designed and implemented the scraping logic using Selenium WebDriver.

- Developed skill-based filtering and CSV export using Pandas.

- Addressed dynamic content loading and missing data scenarios.

- Authored automated tests with `pytest` to ensure code correctness and reliability.

- Wrote comprehensive documentation and usage instructions.

Technologies Used

- Languages: Python (primary language for all scripts and logic)

- Frameworks/Libraries:
  - Selenium (browser automation and web scraping)
  - Pandas (data manipulation and CSV export)

- Testing:
  - pytest (automated tests in `test_project.py`)

- DevOps/Tools:
  - Git (version control)
  - Chrome WebDriver (browser automation)

- Documentation:
  - Markdown (`README.md` for usage and setup)


Process

- Planning:
  - Identified the key data fields (title, company, salary, location, skills) to extract from Naukri.com.

- Implementation:
  - Used Selenium to automate browser actions and extract job data.
  - Employed Pandas for data structuring and CSV export.
  - Developed a filtering mechanism for user-specified skills.

- Testing:
  - Created automated tests (`test_project.py`) using `pytest` to validate scraping and filtering logic.

- Documentation:
  - Documented setup, usage, and troubleshooting in `README.md`.
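The Pandas structuring and CSV export step can be sketched as follows. The `export_jobs` function and the column list are assumptions for illustration. `pandas.DataFrame` and `DataFrame.to_csv` are the standard Pandas APIs.

```python
import pandas as pd

# Column order for the CSV (assumed; mirrors the fields listed in the Overview).
COLUMNS = ["title", "company", "salary", "location", "skills"]


def export_jobs(rows: list[dict], path_or_buf) -> pd.DataFrame:
    """Build a DataFrame from scraped rows and write it as CSV without the index."""
    df = pd.DataFrame(rows, columns=COLUMNS)  # keys missing from a row become NaN
    df.to_csv(path_or_buf, index=False)
    return df
```

A call such as `export_jobs(rows, "jobs.csv")` writes the file. Passing `index=False` keeps the row index out of the output, so the CSV contains only the scraped columns. Pandas quotes the `skills` cell automatically because it contains commas.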

Recognition

I am proud to share that I have successfully completed the CS50P - Introduction to Programming with Python course.

Certificate

Challenges & Solutions

Dynamic Content Loading

Naukri.com uses JavaScript to render job listings, which causes timing issues for scraping: elements may not exist yet when the scraper tries to read them.

Solution: Used Selenium's `WebDriverWait` to ensure elements are loaded before extraction.

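Complex HTML Structures

Job data sits in inconsistent or nested HTML elements, which makes extraction error-prone.

Solution: Implemented a helper function, `get_text_or_default`, that extracts text robustly and falls back to a default value when an element is missing.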

Performance Bottlenecks

Scraping was slow because of large result sets and dynamically loaded content.

Solution: Optimized the data extraction loops and used efficient Pandas operations for filtering and export.
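Skill filtering is one place where an efficient Pandas operation helps: a vectorised string match over the whole column replaces a Python loop over rows. This is a sketch under two assumptions. The `skills` column is assumed to hold comma-separated text, and `filter_by_skills` with its `match_all` flag is hypothetical. Each pattern is anchored to comma boundaries so that `java` does not match `javascript`.

```python
import operator
import re
from functools import reduce

import pandas as pd


def filter_by_skills(df: pd.DataFrame, skills: list[str], match_all: bool = False) -> pd.DataFrame:
    """Keep rows whose comma-separated 'skills' cell contains the requested skills.

    match_all=False keeps rows with ANY requested skill; True requires ALL of them.
    """
    wanted = [s.strip().lower() for s in skills if s.strip()]
    if not wanted:
        return df
    cells = df["skills"].fillna("").str.lower()
    # One boolean mask per skill; each pattern matches a whole comma-delimited token.
    masks = [
        cells.str.contains(rf"(?:^|,)\s*{re.escape(skill)}\s*(?:,|$)", regex=True)
        for skill in wanted
    ]
    combined = reduce(operator.and_ if match_all else operator.or_, masks)
    return df[combined]
```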

Testing Automation

Scraping logic must stay reliable even as the site's structure changes.

Solution: Developed automated tests with `pytest` to validate core logic and catch regressions.
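Tests for interactive code usually replace `input()` with pytest's built-in `monkeypatch` fixture. The functions `parse_skills` and `prompt_skills` below are hypothetical stand-ins, not the contents of `test_project.py`. They illustrate the pattern of keeping parsing logic pure, so it can be tested without a browser or a live site.

```python
def parse_skills(raw: str) -> list[str]:
    """Split a comma-separated skill string into a clean, lower-cased list."""
    return [part.strip().lower() for part in raw.split(",") if part.strip()]


def prompt_skills() -> list[str]:
    """Ask the user which skills to filter by."""
    return parse_skills(input("Skills to filter by (comma-separated): "))


# Tests in the style of test_project.py; pytest collects functions named test_*.
def test_parse_skills_ignores_blanks_and_case():
    assert parse_skills(" Python, ,SQL ") == ["python", "sql"]


def test_prompt_skills_uses_patched_input(monkeypatch):
    # monkeypatch swaps builtins.input for this test only and restores it afterwards.
    monkeypatch.setattr("builtins.input", lambda _prompt: "Selenium, Pandas")
    assert prompt_skills() == ["selenium", "pandas"]
```

Running `pytest` collects both tests. The first test needs no fixtures, and the second relies on `monkeypatch` to undo the patch automatically.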

Note: On Site Changes and Locators

- The HTML structure and element locators (CSS selectors, XPaths) used in `project.py` are based on the current version of Naukri.com.

- If the website updates its layout or class names, you may need to update these locators in the code to restore scraping functionality.

- Review and adjust the selectors in `project.py` if you encounter errors or missing data after a site update.
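Locator updates like the ones described above become less invasive if every selector lives in a single mapping that an optional JSON file can override. This is a hypothetical design, not how `project.py` is organised. All selector values are placeholders rather than Naukri.com's real class names.

```python
import json
from pathlib import Path
from typing import Optional

# Placeholder selectors only: real values must be read from Naukri.com's live markup.
DEFAULT_SELECTORS = {
    "job_card": "div.job-card",
    "title": "a.title",
    "company": "a.company",
    "salary": "span.salary",
    "location": "span.location",
    "skills": "ul.tags li",
}


def load_selectors(override_path: Optional[str] = None) -> dict:
    """Return the default selectors, updated with any keys found in a JSON override file."""
    selectors = dict(DEFAULT_SELECTORS)  # copy so the defaults are never mutated
    if override_path and Path(override_path).is_file():
        with open(override_path, encoding="utf-8") as fh:
            selectors.update(json.load(fh))
    return selectors
```

After a site change, only the override file (for example a hypothetical `selectors.json` containing `{"title": "h2.job-title"}`) needs editing, while the scraping code stays untouched.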

Achievements

- Automated the extraction and filtering of job listings from Naukri.com.

- Enabled skill-based filtering and CSV export for downstream analysis.

- Developed a test suite (`test_project.py`) using `pytest` to ensure reliability.

- Improved scraping robustness and error handling based on real-world site changes.

Key Learnings

- Gained practical experience with Selenium for dynamic web scraping.

- Enhanced skills in data manipulation and export using Pandas.

- Learned to write maintainable, testable code for web automation projects.

- Understood the importance of robust error handling and documentation.

- Applied `pytest` for effective and maintainable automated testing.

Outcomes

- Successfully automated job search and filtering for Naukri.com.

- Produced structured CSV datasets for analysis.

- Provided a reusable, documented tool for job seekers and data analysts.

- Ensured code reliability through automated testing with `pytest`.

Visuals

Video Demo

Links

Conclusion

Naukri Webscraper demonstrates how Python automation can handle real-world data extraction and analysis. By combining Selenium for browser automation with Pandas for filtering and export, the project replaces manual filtering of Naukri.com listings with a repeatable, skill-based search whose results are saved as structured CSV files. The codebase is tested with `pytest` and documented for future use and extension.

AI Skill Assessment

Prompt¹ · Source

Strengths

Web Scraping Automation

- Demonstrates strong proficiency with Selenium for browser automation, including headless operation, custom user agents, and dynamic navigation (e.g., paginated scraping, handling search forms).

- Robust handling of web elements using both CSS selectors and XPaths, with fallback/default logic for missing elements.

Data Handling & Export

- Uses Pandas effectively for data manipulation and CSV export.

- Implements structured data extraction with a clear schema (job title, company, salary, skills, etc.).

Testing & Quality Assurance

- Provides automated tests using `pytest`, including fixtures, mocked user input, and live web tests (with appropriate skips for anti-bot or site-change issues).

- Tests cover both core scraping logic and utility functions.

Documentation

- Comprehensive technical documentation (`doc.md`) and a user-facing `README.md` with clear instructions, schema definitions, troubleshooting, and usage examples.

- Documents function purposes, parameters, and expected behaviors in code docstrings.

User Interaction & Error Handling

- Interactive CLI prompts for user input (search terms, page count, skill filters).

- Handles invalid input and exceptions gracefully (e.g., `KeyboardInterrupt`, `ValueError`, missing elements).
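The input handling described above might look like this in a CLI. The `ask_page_count` function and the 50-page upper bound are assumptions. `int()` raises `ValueError` on non-numeric text, which triggers a re-prompt. A `KeyboardInterrupt` (Ctrl+C) is caught at the top level, so the program exits with a short message instead of a traceback.

```python
def ask_page_count(prompt: str = "Number of pages to scrape: ", max_pages: int = 50) -> int:
    """Re-prompt until the user enters a whole number between 1 and max_pages."""
    while True:
        raw = input(prompt)
        try:
            pages = int(raw)
        except ValueError:
            print(f"{raw!r} is not a whole number, please try again.")
            continue
        if 1 <= pages <= max_pages:
            return pages
        print(f"Please enter a number between 1 and {max_pages}.")


def main() -> None:
    try:
        pages = ask_page_count()
        print(f"Scraping {pages} page(s)...")  # the real scraper would start here
    except KeyboardInterrupt:
        # Ctrl+C: exit with a short message instead of a traceback.
        print("\nInterrupted by user, exiting.")
```

In a script, `main()` would normally be called under an `if __name__ == "__main__":` guard.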

Project Structure & Packaging

- Uses `pyproject.toml` for dependency management and project metadata.

- Separates main logic, tests, and documentation cleanly.

Areas for Growth

Security & Anti-Bot Evasion

- No evidence of advanced anti-bot evasion techniques (e.g., proxy rotation, CAPTCHA handling, request throttling beyond simple sleep).

- No explicit handling of `robots.txt` or ethical scraping considerations in code.
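Checking `robots.txt` needs only Python's standard library. The sketch below runs `urllib.robotparser.RobotFileParser` on rules that have already been downloaded, which keeps it testable offline. The function name `is_allowed` is hypothetical, and the sample rules are made up rather than taken from Naukri.com's actual policy. Against the live site, one would instead call `set_url("https://www.naukri.com/robots.txt")` followed by `read()`.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines: list[str], url: str, user_agent: str = "*") -> bool:
    """Return True if robots.txt rules (given as lines) permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    parser.modified()  # record a fetch time so can_fetch() trusts the parsed rules
    return parser.can_fetch(user_agent, url)


# Made-up rules for illustration; not Naukri.com's real robots.txt.
SAMPLE_RULES = [
    "User-agent: *",
    "Disallow: /private/",
]
```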

Scalability & Performance

- Scraping is single-threaded and synchronous; not optimized for large-scale or parallel scraping.

- No batching, queuing, or distributed scraping logic.

CI/CD & DevOps

- No evidence of CI/CD pipelines, Dockerization, or deployment automation.

- No Makefile or scripts for environment setup/testing.

Code Modularity & Extensibility

- All logic is in a single script (`project.py`); it could benefit from modularization (e.g., separating scraping, filtering, and CLI logic).

- No plugin or configuration system for adapting to site changes.

Error Logging & Monitoring

- Uses print statements for errors; lacks structured logging or monitoring for production use.
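Replacing `print` calls with the standard `logging` module adds timestamps, severity levels, and configurable output. The logger name `naukri_scraper` and the `extract_field` helper are hypothetical. In this sketch, a missing field produces a `WARNING` record that can be filtered, written to a file, or forwarded to a monitoring system.

```python
import logging

logger = logging.getLogger("naukri_scraper")  # hypothetical logger name


def configure_logging(level: int = logging.INFO) -> None:
    """Send timestamped, levelled records to stderr (call once at start-up)."""
    logging.basicConfig(level=level, format="%(asctime)s %(levelname)s %(name)s: %(message)s")


def extract_field(card: dict, key: str, default: str = "Not specified") -> str:
    """Return card[key], logging a warning (instead of printing) when it is missing."""
    value = card.get(key)
    if not value:
        logger.warning("Missing %r on card %s, using default", key, card.get("id", "?"))
        return default
    return value
```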

GUI/UX

- No GUI or web interface; CLI-only interaction.

Role Suitability

Best Fit Roles

- Python Backend Developer
  - Strong evidence of backend scripting, data processing, and automation skills.

- Web Scraping/Data Extraction Engineer
  - Demonstrated expertise in Selenium, data extraction, and handling dynamic web content.

- QA Automation Engineer
  - Experience with automated testing, mocking, and test-driven development using `pytest`.

- Technical Writer/Documentation Specialist
  - High-quality, thorough documentation and user guides.

Well-Suited For

- Data Analyst (with Python)
  - Familiarity with Pandas and CSV data workflows.

- SDET (Software Development Engineer in Test)
  - Automated test coverage and test design.

Less Suited For

- Frontend Developer
  - No evidence of frontend/UI development (web or desktop).

- DevOps Engineer
  - Lacks CI/CD, containerization, and deployment automation.

- Cloud/Distributed Systems Engineer
  - No cloud integration, distributed scraping, or scalable architecture.

Summary:

The developer demonstrates strong skills in Python scripting, web scraping automation with Selenium, data processing with Pandas, and automated testing with pytest. The codebase is well-documented and user-friendly, with robust error handling and interactive CLI features. Areas for growth include modularization, scalability, advanced anti-bot techniques, and DevOps practices. The developer is best suited for backend, automation, and data extraction roles, and less suited for frontend or DevOps-focused positions based on the current codebase.

¹ This AI skill assessment was generated from `skill-assessment-prompt.md` and the provided project documentation. It is intended as an illustrative summary and should be interpreted in the context of the code and documentation available in the codebase.